Your Guide to Cloud File Storage

The cloud has revolutionized on-demand computing and hosting for a wide range of workloads. Many organizations have benefited from converting IT spend from CAPEX to OPEX and from having highly scalable infrastructure for major traffic events. But as organizations began migrating their technology stacks to the cloud, they found themselves disappointed with the limited file storage available on cloud compute nodes.

Cloud file storage solves the problem of limited file storage by delivering a virtualized equivalent to the on-premises network-attached storage (NAS) system. Servers and applications can call on this virtualized storage, where users can create, read, update, and delete files. Below are some reasons why you may want to consider using this type of cloud storage.

Cloud File Systems are Scalable

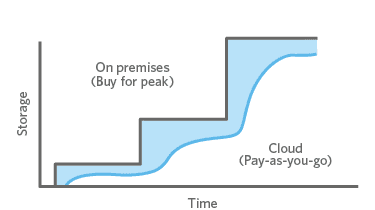

The first advantage is that cloud file systems are highly scalable. Have a spike in usage? Cloud file systems can ramp up the allotted space right away, with no need to purchase hard drives and provision them in a NAS. And rather than having to plan for the peak and order excess storage, many cloud file systems can provide the exact space you’re currently using by dynamically scaling up and down based on usage.

Cloud File Systems are More Efficient for Budgets

For cloud file system vendors that let you bring online exactly the storage you need, you won’t pay for what you don’t use. Consider an on-premises hosting scenario in which only 1 PB of data is currently stored, but forecasts show that you’ll need 10 PB of storage within six months. Your on-site NAS must be configured for that 10 PB scenario even though 90% of the space will go unused today. Many cloud file systems are different: you pay only for what you’re using today. You won’t have to buy for the peak, and you won’t be stuck with a bunch of gear if the storage requirements subside. It is worth noting, however, that this is not true of all cloud file systems, as will be discussed later.
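The arithmetic behind this scenario can be sketched in a few lines of Python. The price per petabyte is a made-up placeholder rather than a quote from any vendor, and the function names are illustrative only. The sketch also treats both models as a flat monthly charge per petabyte, which is a simplification, since on-premises hardware is usually a capital purchase. The point is the ratio between the two bills, not the dollar amounts.

```python
# Hypothetical unit price; substitute your own figures.
PRICE_PER_PB_MONTH = 20_000.0  # assumed placeholder, not a real vendor price


def unused_fraction(used_pb: float, provisioned_pb: float) -> float:
    """Share of the provisioned capacity that sits idle."""
    return 1.0 - used_pb / provisioned_pb


def monthly_cost(billed_pb: float, price_per_pb: float = PRICE_PER_PB_MONTH) -> float:
    """Cost for one month when billed for `billed_pb` petabytes."""
    return billed_pb * price_per_pb


# Figures from the scenario: 1 PB stored today, 10 PB forecast in six months.
used_pb, forecast_pb = 1.0, 10.0

idle = unused_fraction(used_pb, forecast_pb)   # capacity bought but not used
provisioned_bill = monthly_cost(forecast_pb)   # sized for the forecast peak
usage_bill = monthly_cost(used_pb)             # billed on current usage only

print(f"Idle capacity when sized for the peak: {idle:.0%}")
print(f"Peak-sized bill vs. usage-based bill: {provisioned_bill / usage_bill:.0f}x")
```

Whatever unit price you plug in, sizing for the forecast costs ten times the usage-based bill until the data actually grows.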

Cloud File Systems are Built on Standard Protocols

The established standards used to communicate with your on-premises NAS are also used with cloud file systems, such as Network File System (NFS), Common Internet File System (CIFS), and Server Message Block (SMB). These protocols are familiar to IT professionals, and applications can begin calling on them almost immediately. Using standard protocols is a major difference between cloud file storage and cloud object storage: with the latter, developers have to incorporate a cloud provider’s API into the application to store objects. Although cloud object storage may make sense for cloud-native applications, cloud file systems tend to work better for workloads that have been ported over to the cloud.
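To illustrate why ported applications need little or no change, here is a minimal Python sketch of create, read, update, and delete operations that uses only the standard library. It assumes the cloud file share has already been mounted over NFS or SMB by an administrator. A temporary directory stands in for that mount point so the example runs anywhere, and the `reports/q1.txt` path is hypothetical.

```python
import tempfile
from pathlib import Path


def crud_demo(mount_point: Path) -> str:
    """Create, update, read, and delete a file using ordinary file calls."""
    report = mount_point / "reports" / "q1.txt"   # hypothetical path on the share
    report.parent.mkdir(parents=True, exist_ok=True)

    report.write_text("draft\n")                  # create
    with report.open("a") as handle:              # update (append)
        handle.write("final\n")
    content = report.read_text()                  # read
    report.unlink()                               # delete
    return content


# A temporary directory stands in for the mounted share in this sketch.
with tempfile.TemporaryDirectory() as tmp:
    result = crud_demo(Path(tmp))

print(result)
```

Nothing in `crud_demo` is specific to a cloud provider. Pointing `mount_point` at the real mount location is the only change the application would see, whereas an object store would require calls to that provider's SDK.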

Cloud file storage has been found to effectively provide virtualized storage for the following use cases:

- Online web content

- Media processing

- Containers

- Big data analytics

- Database backups

- Home directories

Cloud file storage can either be managed by the cloud provider, who provides all the patching, updates, and troubleshooting, or it can remain unmanaged, which means the responsibility for management activities falls on your team. Other variables with cloud file storage include the cost per gigabyte, latency, geographic availability, and whether the cloud vendor has a cloud file system backup available.

Highlighted below are three public cloud file storage offerings, each with unique attributes.

AWS Elastic File System (EFS)

AWS EFS is a fully managed cloud file system that uses the NFSv4 protocol. It can be mounted from AWS EC2 compute nodes as well as on-premises servers. At peak performance, AWS EFS can support over 10 GB/sec and more than 500,000 IOPS. At the time of writing, 22 of 23 AWS locations across the globe provide access to AWS EFS (the only exception is the Asia Pacific (Osaka-Local) region). AWS EFS scales dynamically and does not depend on an administrator to provision storage space in advance, and it can back up your cloud file system automatically and incrementally through AWS Backup. AWS EFS integrates tightly with the Amazon Elastic Kubernetes Service (EKS) container platform as well as the AWS Fargate and AWS Lambda serverless technologies.

AWS offers two different pricing tiers for this storage based on whether the files are actively or infrequently used.

Azure Files

Azure Files is an on-demand, cloud-native file system that uses the SMB, NFS, and FileREST protocols. You can mount Azure Files from cloud or on-premises deployments of Windows, Linux, and macOS. Microsoft also provides Storage Migration Service and Azure File Sync for companies that use on-premises Windows file servers and want to move their files to the cloud quickly without breaking existing links. If your business already uses Azure Kubernetes Service as its container technology, Azure Files integrates tightly with that service.

The pricing for Azure Files services is based on a number of variables, which sets it apart from AWS EFS. The first such variable is the type of redundancy strategy that Azure Files uses:

- Locally Redundant Storage (LRS)

- Zone-Redundant Storage (ZRS)

- Geo-Redundant Storage (GRS)

- Geo-Zone Redundant Storage (GZRS)

- Read-Access Geo-Redundant Storage (RA-GRS)

The second variable is the performance tier needed to access the files, which falls on a continuum from high performance to infrequently accessed:

- Premium: high I/O workloads done on solid-state drives (SSDs)

- Transaction optimized: heavy workloads that can accommodate more latency

- Hot: general-purpose file storage

- Cool: the most cost-effective solution for archive file storage

Finally, the amount of data stored and the specific kinds of data transactions both affect the final pricing. In short, although Azure Files pricing may be more convoluted than that of AWS EFS, administrators can dial in exactly what they want in a cloud file system. At the time of writing, Azure Files is available in 46 data centers around the globe and can burst up to 100,000 IOPS with its premium storage option.

Google Filestore

Google Filestore is a fully managed (what Google calls NoOps) cloud file system that uses the NFSv3 protocol. It works only within the Google Cloud Platform (GCP): with applications running on Compute Engine, Google’s Infrastructure-as-a-Service (IaaS) platform, or on Google Kubernetes Engine clusters.

As opposed to AWS EFS and Azure Files, the Google Filestore behaves more like a NAS in both scalability and pricing in that you are provisioning—and paying for—a drive of a specific size. For example, if you were to deploy the minimum 1 TB worth of cloud file storage, you are paying for that full terabyte, regardless of whether you are only consuming 100 GB of storage or all of it. Additionally, to scale that 1 TB of Google Filestore storage, it appears that an administrator would have to increase or decrease the cloud storage via the Google Cloud Console, command line, or API.

With respect to pricing, Google Filestore has three different tiers: Basic HDD, Basic SSD, and High Scale SSD. Each tier requires a minimum commitment of storage, which is 1 TB, 2.5 TB, and 60 TB, respectively. Given that you are paying for the entire amount of storage provisioned, this particular solution seems to make sense primarily for GCP workloads with high storage requirements. Google Filestore is available in 24 locations around the globe.
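The effect of provisioned billing with tier minimums can be sketched as follows. The tier names and minimum sizes come from the text above. The function names are illustrative, no prices are modeled, and the sketch assumes 1 TB equals 1,000 GB.

```python
# Minimum provisioned capacity per tier, in TB, as listed in the text.
TIER_MINIMUM_TB = {
    "Basic HDD": 1.0,
    "Basic SSD": 2.5,
    "High Scale SSD": 60.0,
}


def billed_capacity_tb(tier: str, needed_tb: float) -> float:
    """Capacity you pay for: what you need, but never below the tier minimum."""
    return max(TIER_MINIMUM_TB[tier], needed_tb)


def utilization(used_tb: float, billed_tb: float) -> float:
    """Fraction of the billed capacity that actually holds data."""
    return used_tb / billed_tb


# The earlier example: 100 GB (0.1 TB) stored on a minimum-size Basic HDD instance.
billed = billed_capacity_tb("Basic HDD", needed_tb=0.1)
used_share = utilization(0.1, billed)

print(f"Billed: {billed} TB, utilization: {used_share:.0%}")
```

Running the same calculation against the High Scale SSD tier shows why the minimums matter: a workload needs tens of terabytes before that tier's 60 TB floor stops dominating the bill.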

Solving the challenges of cloud-based file storage

With so many cloud storage options, it’s understandable why many businesses may be concerned with taking their file system to the cloud. Apprehensions about vendor lock-in, cybersecurity threats to cloud platforms, regulatory compliance, or storing multiple out-of-sync copies of data are enough to give even the most seasoned IT professional pause.

The options presented above offer many advantages for accessing and scaling data in the cloud. But as cloud services expand and hyperscale providers release new technologies (seemingly on a daily basis), you may find yourself limited in the level of innovation you can achieve when your data is locked into a single provider.

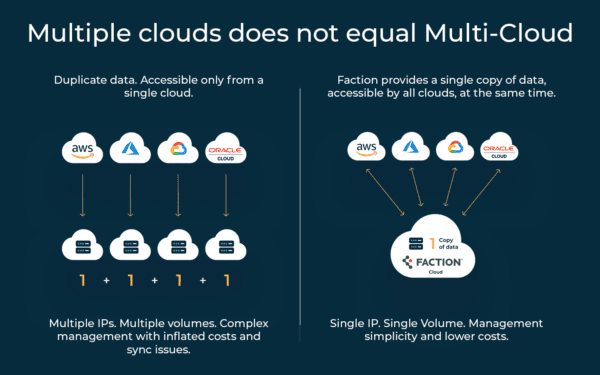

The alternative is a true multi-cloud approach, which abstracts your data from the native cloud storage location but keeps it accessible to all cloud services, apps, and cloud VMs at the same time. This model frees you from single-cloud lock-in, so you can use the best and latest service from any public cloud provider as your business demands change.

Enter Multi-Cloud Data Services.

Besides storing your data in a cloud’s native file storage, you can turn to third-party vendors that let you keep your data outside of the cloud while still using cloud services to access and use it. Unlike vendors that allow you to attach your external file storage to only a single cloud at a time, Faction’s Cloud Control Volume (CCV) solution lets you access your data from all public clouds at the same time. This method eliminates the need to duplicate data across clouds, saving you cost and management complexity. So beware as you research other third-party solutions: multiple clouds does not equal multi-cloud.

There are, of course, ways to do this yourself by hosting a storage appliance in a third-party data center and connecting it to the public cloud via WAN links or data center provider solutions. But you would be saddled with CapEx costs and with the complexity and time of managing the infrastructure and its connectivity to public clouds, and you would have to negotiate and sign numerous contracts with data center providers and network vendors. In this scenario, you would also have to make a long-term commitment to the throughput between your data and the public cloud, and you would be limited to one cloud at a time.

With Faction, your data is accessed via NFS and is presented as a single volume, single storage namespace, and single IP/subnet, with throughput of up to 2 Tb/s shared across public clouds. Multi-cloud connectivity is included in the service and is managed, deployed, and enabled by Faction Internetwork Exchange, our patented solution. Speed of access to your data from the cloud is “fungible,” meaning you can dynamically allocate the throughput you need to each cloud based on your application requirements.
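The idea of a fungible throughput budget can be illustrated with a small Python sketch that splits a fixed shared total across clouds in proportion to demand. This is not Faction's API or allocation logic: the function name, the weights, and the proportional rule are all assumptions made for illustration. Only the 2 Tb/s ceiling comes from the text.

```python
def allocate_throughput(total_gbps: float, weights: dict[str, float]) -> dict[str, float]:
    """Split a shared throughput budget across clouds in proportion to their weights."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("at least one cloud needs a positive weight")
    return {cloud: total_gbps * weight / total_weight
            for cloud, weight in weights.items()}


SHARED_GBPS = 2000.0  # the 2 Tb/s ceiling mentioned above, expressed in Gb/s

# Hypothetical demand: the AWS workload needs twice the share of the other two.
plan = allocate_throughput(SHARED_GBPS, {"aws": 2, "azure": 1, "gcp": 1})

for cloud, gbps in plan.items():
    print(f"{cloud}: {gbps:.0f} Gb/s")
```

Changing the weights and calling the function again models shifting throughput from one cloud to another while the shared total stays fixed.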

The all-inclusive service is purchased on a monthly basis and can be expanded in 1 TB increments to grow with your business and data needs.

We have seen customers leverage this solution for groundbreaking use cases like genomics, massively parallel processing (MPP) data warehouses, data analytics, or simply as secondary storage. The possibilities are unbounded once you unleash the power of a multi-cloud approach.

Faction Can Help Achieve Your Multi-Cloud Data Strategy

If you’re ready to take advantage of a multi-cloud strategy, Faction is ready to be your partner. You can tune into our on-demand webinars to learn more about the benefits of multi-cloud storage and how to unlock the power of data availability in the multi-cloud, or you can contact us now to learn more about how we can help your business!